Type: Individual Project

Timeline: 3 Months

Topic: AI & Mental Health

Research: Survey & Testing

Design: UI/UX & Hardware

Eng: Arduino Prototyping

Figma

Arduino

Python

Why does "perfect" advice leave us feeling alone?

Users increasingly turn to AI for comfort and receive responses that are logically sound and empathetic. Yet many still feel alone after the conversation ends.

Goal

To reframe AI emotional support from conversation to embodied co-regulation of the nervous system.

Nino shifts emotional support from words to physical experience.

Instead of encouraging longer conversations, it helps users return to their bodies through rhythm, touch, and steady cues.

The system has two parts:

Tangible Interface: A haptic phone case that uses slow, rhythmic vibrations to bring the user’s attention back to the body.

AI Agent: A simple reasoning system that notices when users are stuck in their thoughts and decides when physical grounding should begin.

Together, these parts create a continuous loop. The system notices mental overload, then responds with physical cues, so support is felt in the body rather than just understood in the mind.

I began with a personal contradiction. I often turned to AI for comfort, and the conversations helped. Yet once they ended, I was left with a quiet sense of emptiness.

This made me wonder whether the feeling was unique to me.

To find out, I surveyed 152 young adults who regularly use AI for emotional support. I focused not on what people felt during the conversation, but on how they felt after it ended.

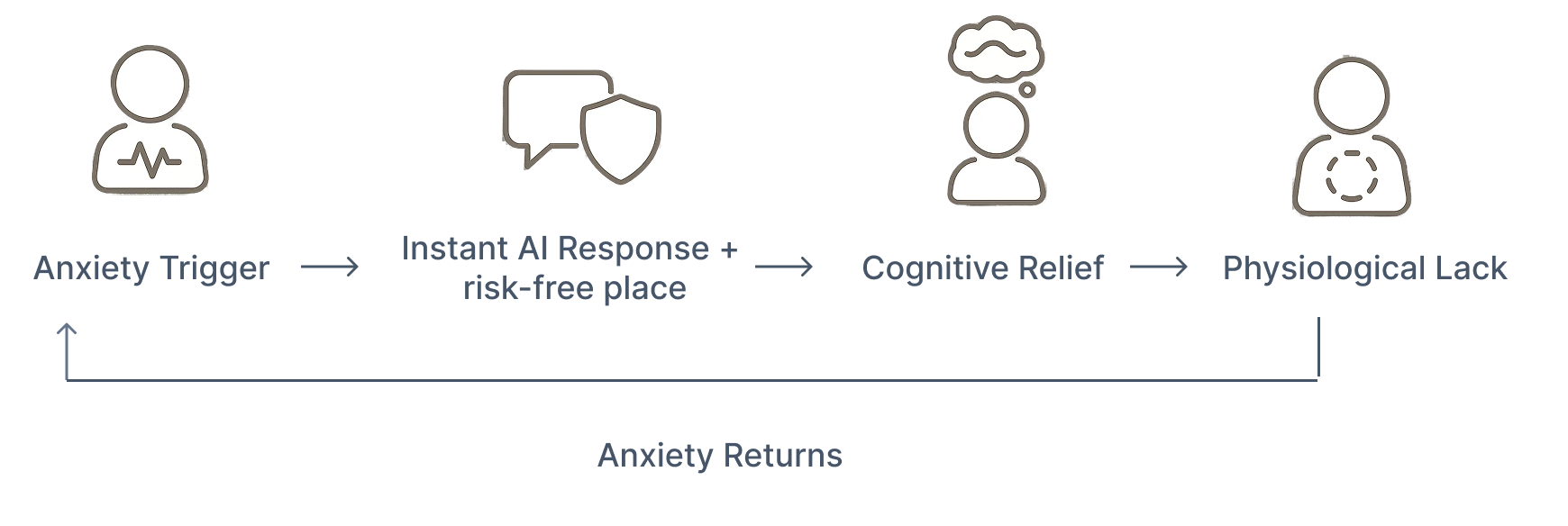

High cognitive satisfaction fails to prevent physiological isolation.

The funnel revealed a consistent pattern: AI effectively supports emotional disclosure and cognitive relief, yet often fails to create a felt sense of presence.

However, the funnel alone could not explain why this gap persists.

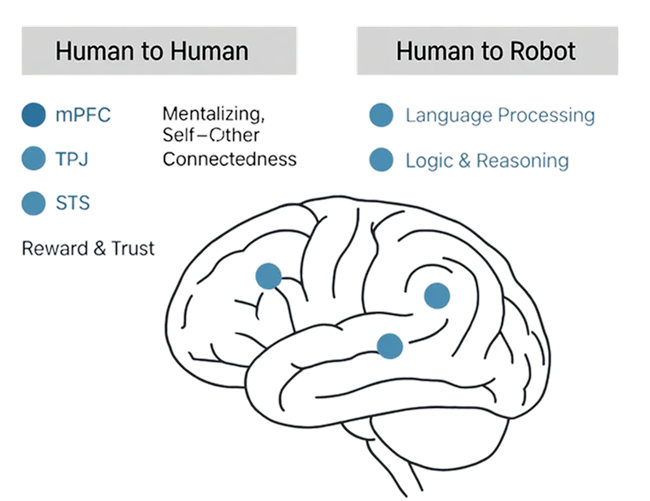

To understand why AI support can feel emotionally incomplete, I reviewed prior research on how humans experience presence in interaction.

Across neuroscience, affective psychology, and HCI, a consistent insight emerged: feeling supported is not driven by language alone.

In human-to-human interaction, presence arises from embodied and social systems related to trust, safety, and co-regulation.

By contrast, AI interactions primarily engage language and logical reasoning, often failing to activate the physiological systems that make people feel accompanied.

Human-to-human interaction activates the brain’s reward and social-connection networks, while AI conversations primarily engage language and logical-processing regions.

Research helps explain this paradox.

AI offers instant, judgment-free responses that produce short-term cognitive relief. However, without sustained physiological grounding, the calming effect fades quickly, prompting users to re-enter the cycle.

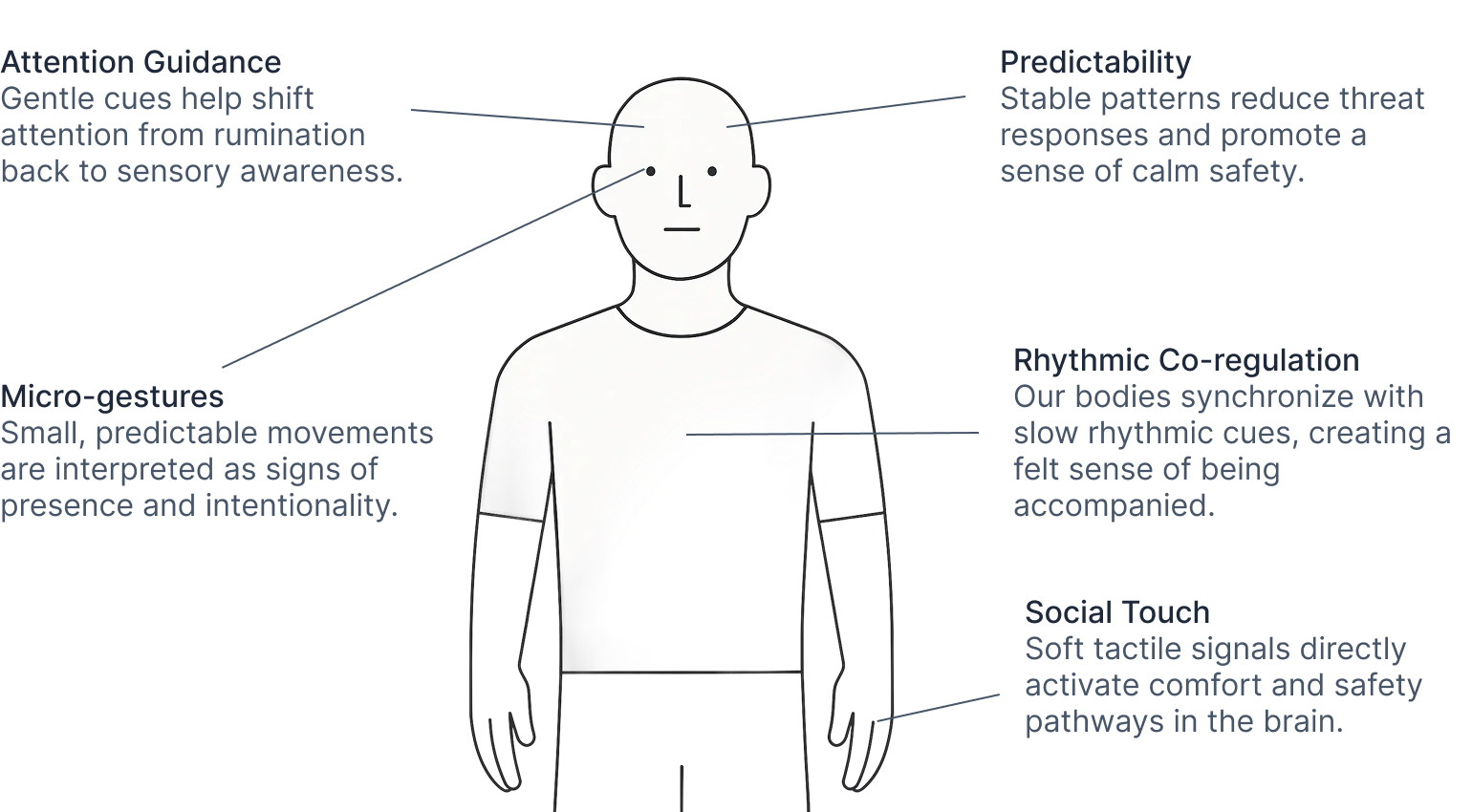

Prior work suggests emotional presence emerges from non-verbal, embodied cues rather than conversation alone. Rhythm, predictability, micro-movements, and gentle touch activate neural pathways associated with safety and connection, allowing the nervous system to settle.

Together, these findings suggest a clear implication: to reduce emotional distance, AI companions must move beyond cognitive reassurance and incorporate sensory and physiological feedback that supports bodily regulation.

01: Presence is Physical

Felt presence relies on sensory synchrony, not just verbal empathy.

→ Design should incorporate sensory or physiological cues the body can feel.

02: Availability Breeds Dependency

Instant, frictionless access creates compulsive dopamine loops.

→ Intentional Friction: Introduce "soft boundaries" and pacing delays to shift users from reactive consumption to a grounded pause.

03: Anxiety is Cognitive; Calm is Somatic

Pure conversation often feeds rumination rather than stopping it.

→ Interoceptive Anchoring: Proactively redirect attention from "thinking about the problem" to "feeling the body."

Through this research, I realized that emotional support fails when it remains abstract. To bridge this gap, I had to move beyond the screen and design for the nervous system.

AI can talk to the mind, but only the body knows when it feels safe.

This insight led me to design Nino not as a chatbot, but as an embodied companion, one that breathes, pulses, and responds to guide users back into physical presence.

Since users interact with AI primarily through their phones, the phone case becomes the natural place to introduce felt companionship. This led to a soft, organism-like shell that can breathe, pulse, and gesture gently in response to the user.

A slow, living rhythm that the body can follow, easing breath and heartbeat through pace, not instruction.

Minimal, abstract gaze cues that signal presence without demanding attention.

Soft, low-intensity haptics that anchor attention in the hands and body.
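As a rough illustration of "pace, not instruction," the slow rhythm could be generated as a smooth rise-and-fall curve that drives both the light and the haptic motor. This is a minimal sketch, not the code running on the prototype: the function names, the default of 6 breaths per minute, and the 8-bit output range are all assumptions made for the example.

```python
import math


def breath_level(t_seconds: float, breaths_per_minute: float = 6.0) -> float:
    """Return a 0..1 intensity at time t, following a raised-cosine "breath".

    breaths_per_minute is an assumed default, not a value from the project.
    """
    period = 60.0 / breaths_per_minute          # seconds per full breath
    phase = (t_seconds % period) / period       # 0..1 position within the breath
    return 0.5 * (1.0 - math.cos(2.0 * math.pi * phase))


def to_duty(level: float, max_duty: int = 255) -> int:
    """Map a 0..1 level to an integer duty value (8-bit range assumed)."""
    return round(max(0.0, min(1.0, level)) * max_duty)


if __name__ == "__main__":
    # Print one assumed 10-second breath, sampled once per second.
    for t in range(11):
        print(t, to_duty(breath_level(t)))
```

The raised cosine starts and ends each cycle at zero with no abrupt steps, which suits a cue meant to be followed by the body rather than noticed as a notification.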

I built a functional prototype that integrates sensing, light, haptics, and display within a soft, manufacturable shell. The internal layout was designed to preserve tactile softness while maintaining the stability of embedded electronics.

A simple embedded setup built around an ESP32, combining light, haptic, and display modules to support rhythmic pacing and gaze-based interaction.

Internal CAD layout showing PCB mounting, light chambers, and haptic placement.

Full-scale prototype printed in flexible photopolymer resin for tactile and light diffusion testing.

Nino retains the reasoning ability of an LLM, but fundamentally shifts its role. Rather than extending conversation, language serves as a gentle bridge that guides attention away from the screen and back into the body.

Excerpt from the actual system prompt used in user testing.

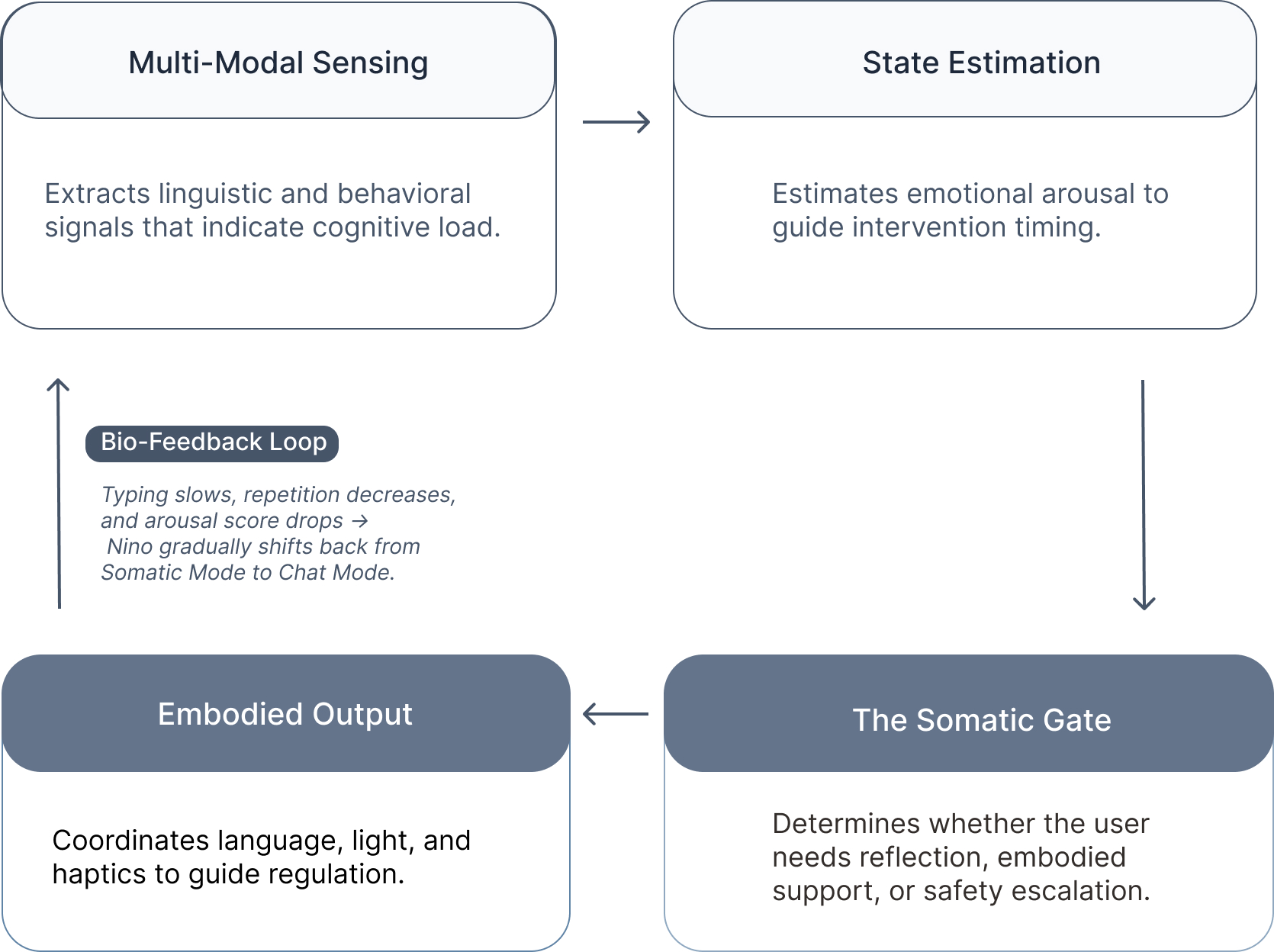

This logic pipeline was iterated through 13 rounds of user testing. Rather than optimizing for conversation length, it prioritizes nervous system regulation by deciding when to respond with language and when to intervene through embodied cues.

When emotional arousal rises, cognitive processing becomes less effective. Grounded in principles from developmental psychology, Nino responds by shifting from language to sensory cues such as light, rhythm, and gaze to support regulation without relying on verbal reasoning.
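The shift from language to sensory cues can be pictured as a simple routing step. The sketch below is illustrative only: the `arousal` score, how it would be estimated, the `0.6` threshold, and the `Response` type are hypothetical and do not come from the actual pipeline.

```python
from dataclasses import dataclass


@dataclass
class Response:
    channel: str  # "language" or "somatic"
    detail: str


def route(arousal: float, threshold: float = 0.6) -> Response:
    """Pick a response channel from an assumed 0..1 arousal estimate.

    At or above the (hypothetical) threshold, respond through the body
    instead of through more words.
    """
    if not 0.0 <= arousal <= 1.0:
        raise ValueError("arousal must be between 0 and 1")
    if arousal >= threshold:
        return Response("somatic", "light, rhythm, gaze")
    return Response("language", "short verbal reply")


if __name__ == "__main__":
    print(route(0.2))
    print(route(0.8))
```

Keeping the threshold as a parameter leaves room to tune the switch point per user rather than fixing one value for everyone.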

Updated every 60s to maintain physiological stability

Supports acute anxiety through slow rhythm, warmth, and steady gaze.

Disrupts rumination using bilateral cues that redirect attention outward.

Encourages gentle connection through warmth and micro-movement.

Maintains a quiet sense of presence even when idle.
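One way to read the four behaviors above is as a small lookup table plus a controller that re-evaluates the active mode on a fixed interval. This sketch assumes the 60 s figure refers to how often the mode is re-evaluated. The mode names, the detected-state labels, and the `ModeController` class are hypothetical and are not taken from the prototype.

```python
# Cues are taken from the descriptions above; mode names are hypothetical.
MODES = {
    "soothe": ("slow rhythm", "warmth", "steady gaze"),  # acute anxiety
    "interrupt": ("bilateral cues",),                    # rumination
    "connect": ("warmth", "micro-movement"),             # gentle connection
    "idle": (),                                          # cues not specified in the source
}

# Hypothetical labels for what the agent detects.
STATE_TO_MODE = {
    "anxious": "soothe",
    "ruminating": "interrupt",
    "lonely": "connect",
}

UPDATE_INTERVAL_S = 60  # assumed meaning of "updated every 60s"


class ModeController:
    """Holds the active mode and changes it at most once per interval."""

    def __init__(self, start: str = "idle") -> None:
        self.mode = start
        self.last_update = None  # time of the last re-evaluation, in seconds

    def step(self, now_s: float, detected_state: str) -> str:
        due = (
            self.last_update is None
            or now_s - self.last_update >= UPDATE_INTERVAL_S
        )
        if due:
            # Unknown states fall back to quiet presence.
            self.mode = STATE_TO_MODE.get(detected_state, "idle")
            self.last_update = now_s
        return self.mode


if __name__ == "__main__":
    controller = ModeController()
    for now, state in [(0, "anxious"), (30, "ruminating"), (60, "ruminating")]:
        mode = controller.step(now, state)
        print(now, mode, MODES[mode])
```

Holding a mode for the full interval avoids rapid switching between cues, which fits the stated aim of keeping the experience steady.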

I conducted user testing sessions to evaluate comfort, perceived presence, and the transition from cognitive to somatic support.

01: Physical comfort and integration

The enclosure still felt slightly firm in extended use, and the breathing light sometimes interfered with phone interaction.

→ Future iterations should further soften contact surfaces and refine component placement.

02: Presence felt, but modes blurred

Users reported a strong sense of companionship, but some found the emotional differences between modes unclear despite visible changes.

→ Mode transitions need clearer affective distinction, not just perceptual variation.

03: Timing of the cognitive-to-somatic shift

While users valued bodily grounding, the system occasionally initiated somatic cues before users felt ready to leave cognitive reflection.

→ More adaptive timing is needed to respect user intent.

This project was both exciting and challenging for me. The hardest part was working with emotion, which is complex and invisible by nature. During user testing, I learned that people differ widely in when they want to shift from thinking to bodily guidance, and in how they respond to Nino’s tone. There was no single moment or response that felt right for everyone. I went through thirteen iterations, and each round revealed a new edge case.

Through this process, I realized how deeply interested I am in understanding human emotion and designing systems around it. While Nino is only a starting point, this project confirmed that I want to keep exploring how data, interaction design, and emotional insight can come together to create more grounded and human support.